Posted on October 6, 2018

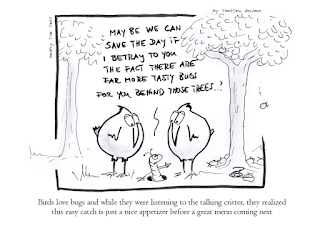

Birds love BUGS

Here are the stats. I raised 2500 bugs in 12 years, then moved to a company where I raised a thousand bugs in only 19 months.Presuming that a typical year has 252 working days, this gives me rate of 2.5 bugs per day or 12 per week (compared to an average 0.8 per day or 4 per week during the last 12 years).

That means the rate of identified defects has increased by the factor of 3.

What do these numbers tell about me or the software-under-test, or the company and what does it tell about the developers who introduce these bugs?

Do these numbers really have any meaning at all? Are we allowed to draw a conlusion based on these numbers without having the context? We don't know which of these bugs were high priority, which ones weren't. We don't know which bugs are duplicated, false alarm and which of those look rather like they should have raised as a change request.

We also don't know what is the philosophy in the team. Do we raise any anomaly we see or do we first talk to developers and fix it together before the issues make it into a bug reporting system. Do we know how many developers are working in the team? How many of them work really 100% in the team or less, sporadically, etc...Also, does management measure the team by the number of bugs introduced, detected, solved or completed user-stories, etc.? May the high number of identified issues be a direct effect of better tester training or are the developers struggling with impediments they can/cannot be held responsible for and these bugs are just a logical consequence of these impediments? Are there developers who introduce more bugs than others?

As is with these numbers, they are important, but they serve only as a basis for further investigation. It's too tempting to use these numbers as is and then draw one's one conclusions without questioning the numbers.

(Source: Simply the Test)

Recent Comments