Posted on November 13, 2018

Sprint Review #37

by Cyndi Cazón

[Crispin - Agile Testing]

(Source: Simply the Test)

Posted on November 11, 2018

Gung-Ho Tester

by Cyndi Cazón

Thanks! I had never met anyone who made a drawing of me. I really enjoyed this moment of a little self-mockery.

And this is the original sketch by Cyndi:

Posted on November 4, 2018

Analyzing Lenny’s flight paths

by Cyndi Cazón

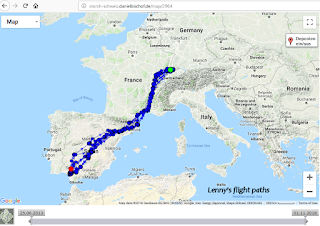

We are tracking the routes of animals all over the world. Some birds have already become famous, like Lenny the stork, whose annual trip to Spain and back to his home at Basel Zoo is followed by over 1,500 fans. Lenny carries a transmitter, and anyone can check online when he starts his journey from Switzerland to southern Spain, where he spends a few months before he returns. His routes have been recorded since 2013 [lenny], and each time he starts his way back to Basel, the local news celebrates it with an article [lenny2]. People are desperately awaiting his arrival around February/March and are fascinated by the fact that he returns to the same nest every year. He also meets the same old girlfriend to enlarge his family. What a faithful and diligent bro.

I wonder what Lenny would think of us bird-stalkers if he knew that each day of his life is tracked as a spot on a map that so many people find exciting. He'd probably take us to court, remove the transmitter, or at least unsubscribe himself from all social media accounts.

Maybe he'd become vainer and demonstrate that he could fly further south to Africa, as other storks, and perhaps his ancestors, did. He'd probably also feel ashamed that we watch him stop at all these fast-food rest stops, which are nothing but garbage dumps. Or he could be a little rascal like in the cartoon, with a big portion of humour. But since he doesn't know he is being observed, he blithely goes on doing what many others do until a yet unknown trigger in his head tells him to go back home.

It is not only the incredible flight paths that I find so fascinating; it is also sad and heartrending to see that almost half of the ringed storks do not survive. The data-logger web page [logger] keeps a record of each stork with his or her name, and if you read their fates, you will learn that some don't even manage to complete a single trip. They die by flying into power lines or wind turbines, they get shot, or they perish because they ate the wrong or spoiled things. None of these causes of death is natural.

But that's not really what I wanted to talk about. While digging deeper into Lenny's flight routes, I came up with a lot of questions. Why do these storks no longer fly to Africa and instead stay in Spain? How do they know where to go, and how do they find their way back to the nest they grew up in? When do they start heading south, and how do they know it's time to go back?

Scientists have asked the same questions about the shortened routes, and they have come up with two theories. The first is that Swiss storks are the result of a resettlement project that started with Algerian storks, which are not used to flying the same long distances as the original native storks.

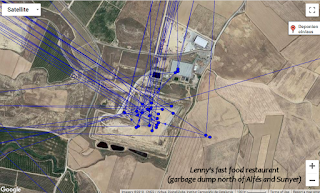

The routes they take now pretty much match the distances their relatives are "programmed for", or what they can manage. The second theory is less pleasant: Spain is full of garbage dumps where they find plenty of food. If you follow Lenny's markers and then switch from the "map" view to the "satellite" view, it is really appalling to see where he likes to rest and eat. I feel sorry for him and his friends. These garbage dumps seem to be another reason why there is no need to search for food further south.

These are fair explanations, and I can live with them very well. But I could not find a clear answer to my other questions, such as how they remember which route to take and when. Explanations in scientific articles range from an "inner clock" and being "genetically programmed to go south with no plan" to "orientation by the Sun", "the stars", and even "magnetic fields" or "orientation by the landscape".

I am a software tester, and if a developer whose software doesn't work gave me that many different explanations, my usual conclusion would be that nobody really knows.

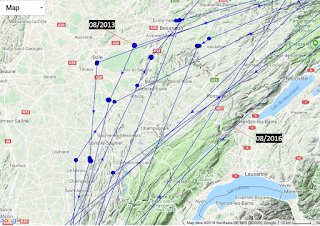

If you zoom out, it looks as if Lenny takes exactly the same route every year. But if you zoom in on his flight path, you see that his first two routes to Spain went over the Burgundy region in France, while in the last two years he took a route about 100 km east of the earlier ones. To me, this doesn't look as if he remembers every hill and river course precisely, but it still looks as if he follows a main path from which he makes small excursions.
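If you want to check an offset like those 100 km yourself, the great-circle (haversine) distance between two logged positions is enough. This is a minimal Python sketch assuming a spherical Earth with a mean radius of 6,371 km; the function name and the coordinates in the usage note are hypothetical and not taken from Lenny's logger data.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; assumes a spherical Earth


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    # Haversine formula: numerically stable for the short distances of a flight track.
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))
```

For example, two made-up points at 47° N, one at 4.5° E and one at 5.8° E, come out just under 99 km apart, so at Burgundy's latitude a shift of barely 1.3 degrees of longitude already makes a "100 km east" route.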

The first time Lenny was tracked heading south, he took a shortcut over the Mediterranean Sea (08/2013), a route he never took again on his way south in the following years. But he used it regularly on his way back north, except in 2016 and 2017. That's interesting, because big birds generally don't like to fly over the sea: there are fewer thermal updrafts, so flying costs them more energy. So what happened that first time that made him never take that route south again? And why did he find the route more attractive for his return to Switzerland? Did he follow and trust less experienced storks? Probably not, because when he got "chipped" he was already an experienced 10-year-old stork. Could weather conditions or seasonal winds play a role, making it more economical to fly over the sea at certain times of the year? Did he learn about dangerous places that he tried to avoid the next time? Do these deviations from the main route broaden his skills and turn him into a real travel expert over time, by learning on the fly and really remembering all these things?

Now we have our link to software testing. Isn't there an analogy to following a strict test script versus taking some detours? Aren't you more likely to find bugs by taking a few extra tours away from your documented test steps? What if we don't enter the prescribed number 9481 into the calculator but do something different and watch what happens next? What if we use an alternative way to trigger the same software function? I can enter text into an edit field by typing or by pasting from the clipboard, and I swear to you it is not the same! Isn't this habit of taking detours exactly what turns an ordinary amateur "checker" into a professional software tester?

As a software tester, you have to activate all your senses, and over time you develop a much better feeling for where things can go wrong. No book can teach you that. There are interesting ones out there, like those by James Whittaker [whit], but nothing compares to experiencing these tours on your own. And what about subject matter experts? They are like the elder storks. You would be foolish not to take their advice. They may not be the best testers, but they might lead you to very interesting places you have never been before.
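The typing-versus-pasting detour can be illustrated with a tiny, entirely hypothetical Python model of an edit field whose digit and length checks live only in the keystroke handler. The class and helper names are made up for illustration; real GUI toolkits have their own event APIs. A scripted check that types 9481 passes, while the detour of pasting the same digits plus one more breaks the field's rule.

```python
class EditField:
    """Hypothetical edit field that should accept at most MAX_LEN digits.

    Validation lives only in the keystroke handler, so the paste path
    silently bypasses it: the kind of defect a scripted check misses.
    """

    MAX_LEN = 4

    def __init__(self) -> None:
        self.text = ""

    def on_key(self, ch: str) -> None:
        # Keystroke path: reject non-digits and anything beyond MAX_LEN.
        if ch.isdigit() and len(self.text) < self.MAX_LEN:
            self.text += ch

    def on_paste(self, clipboard: str) -> None:
        # Paste path: the (deliberately buggy) handler appends without any checks.
        self.text += clipboard


def type_text(field: EditField, text: str) -> None:
    """Simulate typing one character at a time."""
    for ch in text:
        field.on_key(ch)


def is_valid(field: EditField) -> bool:
    """The invariant the field is supposed to guarantee."""
    return field.text.isdigit() and len(field.text) <= EditField.MAX_LEN
```

Typing `"94810"` leaves `"9481"` in the field, exactly as the script expects; calling `on_paste("94810")` instead stores all five digits and `is_valid` returns `False`.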

Sources:

[lenny]

http://storch-schweiz.danielbischof.de/map/2964

[lenny2]

https://www.srf.ch/news/regional/basel-baselland/heimkehr-aus-dem-sueden-storch-lenny-ist-im-anflug

[logger]

https://projekt-storchenzug.com/datenlogger/

[whit]

Exploratory Software Testing by James Whittaker

Posted on November 1, 2018

Story Points at Coco Beach bar

by Cyndi Cazón

Although story points have nothing to do with Scrum (they were popularized by Mountain Goat Software), many companies use them to estimate their tasks. Story points are a bit special because they don't tell you how long a task takes, but rather how complex it may be to complete. In theory, a task can be very trivial and still take a long time because it involves a lot of monotonous work. On the other hand, a complex task can be completed within an hour or a day, depending on the skill of the person implementing it.

I have worked with story points for the past 10 years, and I am still torn between two mindsets: I can't decide whether they are a cool tool or just a buzzword. The paradox of this measure is that, on the one hand, you don't measure how long a task takes, yet you still do so indirectly, because you use the story-point numbers to fill the sprint. That is essentially the same as measuring the hours for a task, because a sprint can only be filled up to the limited number of hours/days available. On the other hand, story points help you notice much faster when a task gets too complex. Since you use Fibonacci numbers, you are alerted right away when a task is estimated higher than 8: there is no 9 and no 10; the next number is 13. This big jump is a great warning sign and prompts you to rethink the size of the story.
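To make the warning-sign idea concrete, here is a minimal Python sketch, assuming a plain Fibonacci scale (1, 2, 3, 5, 8, 13, 21) and the threshold of 8 mentioned above. The names `FIB_SCALE`, `snap_to_scale` and `needs_split` are hypothetical, and rounding a raw gut feeling up to the next card is just one possible convention.

```python
import bisect

# Assumed scale: plain Fibonacci numbers. Many teams use a "modified" scale
# (e.g. 20, 40, 100 after 13); adjust the list if yours differs.
FIB_SCALE = [1, 2, 3, 5, 8, 13, 21]
SPLIT_THRESHOLD = 8  # estimates above this are the warning sign from the text


def snap_to_scale(raw: float) -> int:
    """Round a raw estimate up to the next value on the scale (capped at the top card)."""
    i = bisect.bisect_left(FIB_SCALE, raw)
    return FIB_SCALE[min(i, len(FIB_SCALE) - 1)]


def needs_split(points: int) -> bool:
    """True if the story is too big and should be broken down."""
    return points > SPLIT_THRESHOLD
```

A gut feeling of "about 9" cannot be expressed on this scale: `snap_to_scale(9)` jumps to 13, and `needs_split(13)` flags the story, whereas a 7 lands on 8 and passes.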

Posted on October 27, 2018

Agile cocktail bar

by Cyndi Cazón

Refinement: In a refinement meeting, the business analysts introduce the desired features (= user stories), and the developers estimate how much time they will need to build them. If you are lucky, you work with an experienced team, and estimating each story is a matter of minutes. In the worst case, the estimates are based on limited background information or even total confusion about what is really behind these stories.

The weird thing is that some companies prefer the term "Grooming" over "Refinement", although I recommend against it: "grooming" is also the term for how abusers prepare children for abuse... just be aware of that.

If you realize that a desired story is too complex to be done within a reasonable time frame, it must be broken down into smaller stories until each of them fits. Even during a sprint, you may find that certain tasks of a story cannot be implemented in time, so the business analysts have to break the story up further into smaller pieces. The more stories that require refinement, the higher the probability of losing sight of the big picture. At some point, you may not see the wood for the trees.

Sprint Planning: The team discusses the goal of the next sprint, that is, the tasks and user stories that need to be implemented. Some now notice that certain user stories were underestimated and correct the estimates on the fly, so that the team does not put too many stories into the sprint. To understand what you can put into a sprint, you also need to know your capacity. If you are new to agile processes, you can't know the team's capacity yet; usually you learn the team's typical capacity after a few sprints.

I've been at a company where planning was a matter of 20-30 minutes, while at another we had endless discussions, calculated velocity, and so on; then everyone agreed, although it was already clear there was little hope of actually completing these tasks. The only people who believed we would meet the goal were the managers.
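One way to picture the capacity question is a simple velocity average. The following is a hypothetical Python sketch, not a prescribed Scrum technique: it assumes capacity is the mean number of story points completed in the last three sprints, and it fills the sprint in priority order until the next story no longer fits. The function names and sample numbers are made up for illustration.

```python
from statistics import mean


def forecast_capacity(past_velocities: list[int], window: int = 3) -> float:
    """Average story points completed in the most recent `window` sprints."""
    return mean(past_velocities[-window:])


def plan_sprint(backlog: list[tuple[str, int]], capacity: float) -> list[str]:
    """Take stories in priority order until the next one would exceed capacity."""
    planned: list[str] = []
    used = 0
    for name, points in backlog:
        if used + points > capacity:
            break  # stop here rather than skipping ahead, to respect priority order
        planned.append(name)
        used += points
    return planned
```

With made-up velocities of 18, 24, 21 and 27 points, the forecast is 24; a backlog of login (8), search (5), export (13) and help (3) then yields only login and search. Stopping at the first story that doesn't fit keeps the product owner's order intact; some teams would rather pull smaller stories forward.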

Sprint: Usually a 2-4 week work phase in which the team implements what was agreed in sprint planning. This is also the phase where some people realize they wanted to go on holiday and just didn't say anything before. Others realize they have to attend a workshop, and yet others become ill. At one company, it was common to check regularly whether or not the sprint goal could be met. If there were indications that a task could become too big or that other tasks could become more important, it was normal to take tasks out of the sprint and re-prioritize. At another company, the sprint plan was seen as a firm agreement between the product owner and the team, and it was much harder to take things out.

Daily: In a "Daily", the entire team meets for a 15-minute briefing. Each person has a minute or two (depending on the team size) to explain what they did yesterday and what they plan to do today. People who are not familiar with this kind of briefing (especially those new to agile processes) may misunderstand such meetings as an awkward instrument of micromanagement. As a result, during the briefing they try to convince others how hard they worked and that they will work even harder today.

Others are so enthusiastic that they would like to have the whole 15 minutes to themselves. As a result, the meeting rarely finishes on time. Some team members have no clue what others are talking about; others polish their nails, check the latest news on their phones, or make notes for the next meeting, where they will do the same for yet another meeting. A good friend told me their team had to hold a plank while speaking, which guaranteed nobody talked too much. An external consultant once suggested cutting off the meeting after 15 minutes, regardless of whether all team members had a chance to talk. I think such advice is ridiculous and counterproductive when it comes to building a motivated team.

Burndown-Chart: The burndown chart is a two-dimensional graph designed to track work progress during the sprint. At the beginning you have a lot of work in TODO, and near the end, ideally, nothing should be left. In the best case, the graph shows a nice, consistent staircase going down from top left to bottom right. However, reality is sometimes different. The graph often shows a flat line without any sign of movement until shortly before the sprint ends. It then looks like the path of an airplane that suddenly disappears from the radar. The poor guy is the tester, who gets half-done tasks thrown over the wall to test with zero time left.
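The "airplane vanishing from the radar" shape can even be spotted numerically. Here is a minimal Python sketch, assuming the chart tracks remaining story points at the end of each sprint day; `ideal_line` and `late_burn_share` are hypothetical helpers, and the two-day window is an arbitrary choice.

```python
def ideal_line(total_points: float, sprint_days: int) -> list[float]:
    """Ideal burndown: a straight line from the full scope on day 0 to zero."""
    return [total_points * (1 - day / sprint_days) for day in range(sprint_days + 1)]


def late_burn_share(remaining: list[float], last_days: int = 2) -> float:
    """Share of all completed work that was burned in the final `last_days` days.

    `remaining` holds the work left at the end of each day, starting with day 0.
    Values close to 1.0 mean the chart stayed flat and dropped at the very end.
    """
    total_burned = remaining[0] - remaining[-1]
    if total_burned <= 0:
        return 0.0  # nothing finished (or scope grew): no burn to attribute
    late_burned = remaining[-1 - last_days] - remaining[-1]
    return late_burned / total_burned
```

For a made-up 10-day sprint of 40 points, the ideal staircase burns only 20% of the work in the last two days, while a chart that stays flat and then drops, such as `[40, 40, 40, 38, 38, 38, 38, 36, 36, 20, 0]`, burns 90% of it there: a strong hint that the tester is about to get everything at once.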

Sprint Review: That's like payday. Developers demonstrate the outcome of the sprint. This is usually the day when we see a lot of astonished faces.

Retro (retrospective): After a sprint, all team members have the opportunity to comment on what went well and what didn't. From this, measures are defined for the next sprint. Don't be surprised if, right after the meeting, people have already forgotten what they just discussed and agreed on.

Story Points: Although story points have nothing to do with Scrum (they were popularized by Mountain Goat Software), many companies use them to estimate their tasks. Story points are a bit special because they don't tell you how long a task takes, but rather how complex it may be to complete. In theory, a task can be very trivial and still take a long time because it involves a lot of monotonous work. On the other hand, a complex task can be completed within an hour or a day, depending on the skill of the person implementing it.

I have worked with story points for the past 10 years, and I am still torn between two mindsets: I can't decide whether they are a cool tool or just a buzzword. The paradox of this measure is that, on the one hand, you don't measure how long a task takes, yet you still do so indirectly, because you use the story-point numbers to fill the sprint. That is essentially the same as measuring the hours for a task, because a sprint can only be filled up to the limited number of hours/days available. On the other hand, story points help you notice much faster when a task gets too complex. Since you use Fibonacci numbers, you are alerted right away when a task is estimated higher than 8: there is no 9 and no 10; the next number is 13. This big jump is a great warning sign and prompts you to rethink the size of the story.

Posted on October 8, 2018

Banksy was here

by Cyndi Cazón

Sotheby's in London auctioned off a framed version of Banksy's iconic "Girl With Balloon" for over 1 million pounds. When the final bid was made, the big surprise came: the canvas suddenly slid down through the frame and was partially destroyed by a shredder built into it [nyt]. Right after the stunt, the anonymous artist published a video showing how he had built the shredder into the frame [ban]. The disturbing part of this story is that the picture has probably gained even more fame through this action and will very likely become even more coveted, although shredded to pieces. Whether it was all intentional or not, whether Sotheby's was in the know or not, whether the buyer was well-informed or not... who knows.

Sources:

[nyt]

https://www.nytimes.com/2018/10/06/arts/design/uk-banksy-painting-sothebys.html

[ban]

https://www.instagram.com/p/BomXijJhArX/?utm_source=ig_embed&utm_campaign=embed_video_watch_again

Posted on October 6, 2018

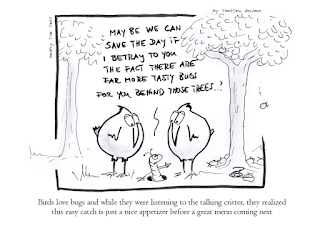

Birds love BUGS

by Cyndi Cazón

Presuming that a typical year has 252 working days, this gives me a rate of 2.5 bugs per day, or roughly 12 per week (compared to an average of 0.8 per day, or 4 per week, over the last 12 years).

That means the rate of identified defects has increased by a factor of about 3.
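Here is the arithmetic behind these figures as a small Python sketch. The 252 working days and the two daily rates come from the post; the five-day working week is an assumption, and the helper names are made up. With these inputs, 2.5 × 5 = 12.5 bugs per week (rounded down to 12 above), 0.8 × 5 = 4, 2.5 / 0.8 ≈ 3.1, and 2.5 × 252 = 630 bugs over a year.

```python
WORKING_DAYS_PER_YEAR = 252  # figure used in the post
WORKING_DAYS_PER_WEEK = 5    # assumption: a five-day working week


def weekly_rate(bugs_per_day: float) -> float:
    """Bugs per week at a given daily rate."""
    return bugs_per_day * WORKING_DAYS_PER_WEEK


def yearly_total(bugs_per_day: float) -> float:
    """Bugs over a year of working days at a given daily rate."""
    return bugs_per_day * WORKING_DAYS_PER_YEAR


def growth_factor(current_per_day: float, previous_per_day: float) -> float:
    """How many times higher the current rate is than the previous one."""
    return current_per_day / previous_per_day
```

Calling `growth_factor(2.5, 0.8)` gives about 3.1, which is the "factor of about 3"; as the rest of the post argues, the number alone says nothing about why.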

What do these numbers tell us about me, the software under test, or the company, and what do they tell us about the developers who introduce these bugs?

Do these numbers really have any meaning at all? Are we allowed to draw conclusions from these numbers without knowing the context? We don't know which of these bugs were high priority and which weren't. We don't know which bugs are duplicates or false alarms, and which look more like they should have been raised as change requests.

We also don't know the team's philosophy. Do we raise every anomaly we see, or do we first talk to the developers and fix it together before the issue makes it into a bug-tracking system? Do we know how many developers work in the team? How many of them really work 100% in the team, and how many work less or only sporadically? Also, does management measure the team by the number of bugs introduced, detected, or solved, or by completed user stories? Could the high number of identified issues be a direct effect of better tester training, or are the developers struggling with impediments they may or may not be held responsible for, with these bugs being a logical consequence? Are there developers who introduce more bugs than others?

As always with such numbers, they are important, but they only serve as a basis for further investigation. It's too tempting to take them at face value and draw one's own conclusions without questioning them.

Posted on September 26, 2018

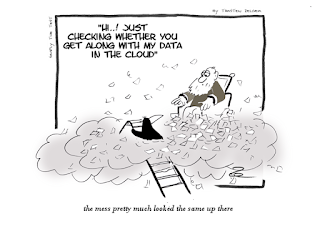

Checking the Cloud

by Cyndi Cazón

And here is what the first draft looked like...

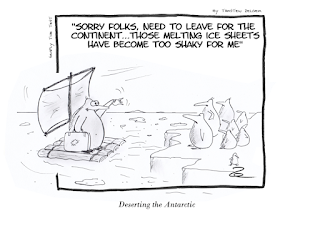

Posted on September 20, 2018

Deserting the Antarctic

by Cyndi Cazón
